A VR-Based Approach to Evaluating Dizziness in Emergency Settings

As a senior, I wanted a capstone experience that brought together my passions for emerging technology and cognitive science. Through my work at Studio X, where I explored virtual reality (VR) development on projects like Aurum VR and URMC Motion Labs, and my academic background in Brain and Cognitive Sciences, I was already immersed in both the technical and research sides of this field. A collaboration between Studio X and the Movement and Plasticity Lab (MAPL) offered the perfect opportunity to merge these interests in a meaningful, applied way.

About the MAPL Lab

Stroke is one of the leading causes of adult disability in the U.S., with approximately 40% of survivors experiencing long-term motor impairments. The MAPL Lab focuses on improving post-stroke rehabilitation by identifying biomarkers that can predict long-term outcomes. Using cutting-edge tools like electromyographic interfaces and biometric sensors, the lab seeks to better understand and treat motor control deficits.

Project Goal and Background

One of the most difficult stroke presentations to diagnose is sudden-onset dizziness or vertigo. While new-onset vertigo accounts for nearly 4% of all Emergency Department (ED) visits, only a small portion of these cases are due to stroke (central vertigo). Accurately identifying those critical cases quickly is vital, but current diagnostic methods rely heavily on clinical expertise and expensive imaging. Even with trained staff, the subtle differences during a bedside exam often lead to misdiagnoses.

To address this gap, MAPL reached out to Studio X, which connected them with me—one of its trained student employees—to help develop a VR-based assessment system designed to rapidly and objectively differentiate between central (stroke-related) and peripheral (non-stroke) causes of vertigo. Instead of relying on subjective tests, like having a patient follow a physician’s finger, we use immersive VR combined with eye and head tracking to recreate those exams in a more controlled, measurable way. Our goal is to build a low-cost, scalable tool that can be used in any emergency setting—even when a neurologist isn’t available.

Methodology

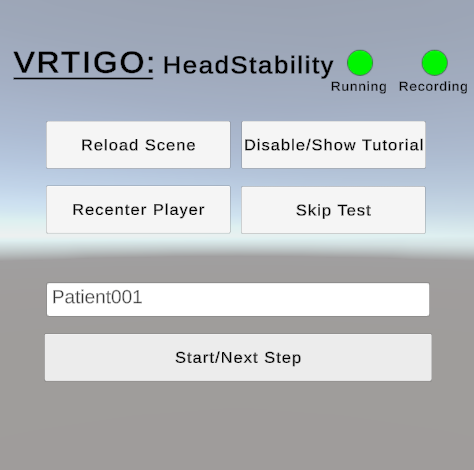

I led the development of the VR assessment platform, focusing on six core diagnostic tasks adapted from traditional clinical exams. All of the tests were built in Unity and are grounded in previous research. The core focus was a system that is quick to administer and that a researcher can monitor live. The six tests are:

- Head Stability Test

The participant fixates on a virtual point in space. We track subtle sway and movement through the VR headset, which reflects head and trunk stability.

- Bucket Test

A virtual adaptation of the traditional bucket test that asks the patient to align a line as vertically as possible without any background indicators. Participants will use the controller joystick to rotate the line in the middle.

- Nystagmus Detection

Participants follow a moving visual target while eye tracking detects involuntary oscillations in eye movement—key indicators of vestibular dysfunction.

- Test of Skew

Based on the cover-uncover test, this task alternately blocks visual input to one eye to detect alignment shifts and disconjugate gaze.

- Finger Tapping Test

Participants quickly and repeatedly pinch their thumb and index finger together. This assesses the speed and rhythm of movement, an indicator of cerebellar coordination.

- Finger to Target Test

Participants are asked to reach for a visual target using their finger, which may be visible or invisible, to assess dysmetria and spatial coordination.
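To give a sense of how recordings from tasks like these could be turned into numbers, here is a minimal Python sketch. It is not the project's Unity code. The function names, the choice of RMS sway for the Head Stability Test, and the coefficient of variation as a rhythm measure for the Finger Tapping Test are all assumptions made for illustration.

```python
import math
import statistics


def head_sway_rms(positions):
    """RMS distance of head positions from their mean position.

    positions: list of (x, y, z) tuples in any consistent unit.
    Hypothetical summary metric; a larger value means more sway.
    """
    n = len(positions)
    cx = sum(p[0] for p in positions) / n
    cy = sum(p[1] for p in positions) / n
    cz = sum(p[2] for p in positions) / n
    squared = [
        (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2
        for x, y, z in positions
    ]
    return math.sqrt(sum(squared) / n)


def tapping_stats(tap_times):
    """Return (taps per second, coefficient of variation of intervals).

    tap_times: ascending timestamps in seconds, one per detected pinch.
    Needs at least two taps. The coefficient of variation is an assumed
    stand-in for "rhythm": 0.0 means perfectly regular tapping.
    """
    intervals = [b - a for a, b in zip(tap_times, tap_times[1:])]
    mean_interval = statistics.mean(intervals)
    rate = 1.0 / mean_interval
    variability = statistics.pstdev(intervals) / mean_interval
    return rate, variability


if __name__ == "__main__":
    print(head_sway_rms([(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]))
    print(tapping_stats([0.0, 0.5, 1.0, 1.5]))
```

Both functions take plain lists so they can be run on exported samples without any VR hardware attached.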

These tests record data, including head and eye tracking, hand positions, and test-specific variables. In addition to the assessments, a manual control system was developed so the researcher can operate the system in real time. It covers how data is organized, test-specific functions, and indicators that show when a test is running and recording information.
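The idea of researcher-controlled recording can be sketched in a few lines of Python. This is an illustrative model only, not the actual Unity implementation. The class name `SessionRecorder`, its methods, and the column names are hypothetical.

```python
import csv
import io


class SessionRecorder:
    """Collects samples only while the researcher has recording switched on."""

    def __init__(self, fields):
        # fields: names of the per-sample values, e.g. ["head_yaw"] (hypothetical)
        self.fields = list(fields)
        self.rows = []
        self.recording = False  # doubles as the "recording" indicator
        self.test_name = None

    def start(self, test_name):
        """Researcher starts a test; samples are kept from now on."""
        self.test_name = test_name
        self.recording = True

    def stop(self):
        """Researcher stops the test; later samples are ignored."""
        self.recording = False

    def log(self, timestamp, **values):
        """Store one sample. Returns False if recording is off."""
        if not self.recording:
            return False
        row = {"test": self.test_name, "t": timestamp}
        row.update(values)
        self.rows.append(row)
        return True

    def to_csv(self):
        """Return all stored samples as CSV text, one row per sample."""
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=["test", "t"] + self.fields)
        writer.writeheader()
        writer.writerows(self.rows)
        return buffer.getvalue()


if __name__ == "__main__":
    recorder = SessionRecorder(["head_yaw"])
    recorder.start("bucket")
    recorder.log(0.1, head_yaw=2.5)
    recorder.stop()
    print(recorder.to_csv())
```

Tagging every row with the test name keeps one session's data in a single table that can still be split per test later.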

What’s Next?

We're preparing to conduct user testing with both healthy individuals and those with a history of stroke or vestibular symptoms. Participants will complete both the VR battery and a standard neurological exam. This phase will help us evaluate the feasibility, reliability, and clinical validity of our VR tool in real-world ED settings.

By analyzing data from eye tracking, hand movement, and postural stability, we aim to detect specific patterns associated with vertigo and uncover novel markers for stroke-related symptoms.

Acknowledgments

I want to recognize the Del Monte Institute for Neuroscience for funding the project. A huge thank you to the MAPL lab and Dr. Busza for her guidance. I also want to thank Studio X for the opportunity, and XR developers Yvie Zhang and Fenway Powers for their support.

About this Author

Josh Jones, XR Specialist & 3D Modeling Team Lead

Photography by Hammer Chen