A collaborative time-based media annotation tool for the web

Mediate is a collaborative time-based media annotation tool developed by River Campus Libraries and Joel Burges, Associate Professor, Department of English, Film and Media Studies, Digital Media Studies, and Director of the Graduate Program in Visual and Cultural Studies at University of Rochester.

Re-imagining Annotation

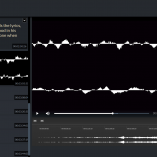

Media literacy is one of the most pressing concerns for research and teaching because multimodal content (images, sounds, and text) is central to our culture. From film and television to video games, music videos, social media, music, and podcasts, multimodal content is ubiquitous in our everyday lives. Yet education still focuses primarily on text-based literacies. Mediate, a web-based platform that allows users to annotate multimedia content, tackles this problem by providing a means for individual or collective inquiry into time-based media. Users can upload video or audio, generate automated markers, annotate their content using customizable schemas, produce real-time notes, and export their data to generate visualizations.
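The page does not say how Mediate generates its automated markers. One common technique for time-based media is shot-boundary detection: compare a color histogram of each frame with that of the previous frame, and place a marker wherever the difference jumps past a threshold. The sketch below assumes the per-frame histograms have already been computed (in a real pipeline, for example, with OpenCV's `cv2.calcHist`). The function names, the distance measure, and the 0.5 threshold are illustrative assumptions, not Mediate's implementation.

```python
from typing import List, Optional, Sequence


def normalize(hist: Sequence[float]) -> List[float]:
    """Scale a histogram so its bins sum to 1 (an empty histogram stays all zero)."""
    total = sum(hist)
    return [b / total for b in hist] if total else [0.0 for _ in hist]


def detect_cuts(histograms: Sequence[Sequence[float]], fps: float,
                threshold: float = 0.5) -> List[float]:
    """Return hypothetical marker times (seconds) where consecutive frames differ sharply.

    The difference is half the L1 distance between normalized histograms,
    which ranges from 0 (identical) to 1 (no overlap at all).
    """
    markers: List[float] = []
    prev: Optional[List[float]] = None
    for index, hist in enumerate(histograms):
        current = normalize(hist)
        if prev is not None:
            distance = 0.5 * sum(abs(a - b) for a, b in zip(prev, current))
            if distance > threshold:
                markers.append(index / fps)  # frame index -> seconds
        prev = current
    return markers


# Four-bin toy histograms: two dark frames followed by two bright frames.
frames = [[10, 0, 0, 0], [9, 1, 0, 0], [0, 0, 1, 9], [0, 0, 2, 8]]
print(detect_cuts(frames, fps=2.0))  # [1.0]
```

Raising the threshold makes the detector more conservative; gradual transitions such as fades produce a run of small differences and would need a different rule.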

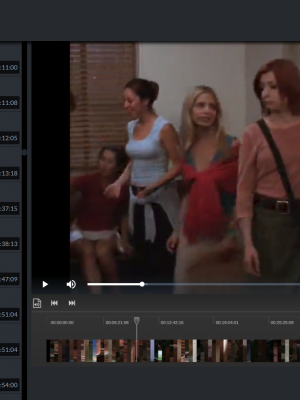

The annotation interface is made up of an annotation panel, a timeline, and a video player. The panel lists each marker's thumbnail, marker type, and time code. The annotation panel syncs with the timeline and allows users to scrub through the video.
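Keeping a panel and a timeline in sync largely comes down to one question: which marker is active at the current playhead position? A minimal sketch, assuming markers are kept sorted by time code, answers it with a binary search from Python's `bisect` module and formats positions as time codes. The `Marker` fields, `MarkerIndex` class, and function names are hypothetical and do not describe Mediate's data model.

```python
import bisect
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Marker:
    time: float        # position in seconds (hypothetical field)
    marker_type: str   # e.g. "shot" or "note"
    thumbnail: str     # path or URL of the frame thumbnail


def timecode(seconds: float) -> str:
    """Format a position in seconds as HH:MM:SS.mmm."""
    millis = round(seconds * 1000)
    hours, rem = divmod(millis, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


class MarkerIndex:
    """Markers sorted by time, with a parallel list of times for binary search."""

    def __init__(self, markers: Iterable[Marker]) -> None:
        self.markers = sorted(markers, key=lambda m: m.time)
        self.times = [m.time for m in self.markers]

    def active(self, playhead: float) -> Optional[Marker]:
        """Return the last marker at or before the playhead, or None."""
        i = bisect.bisect_right(self.times, playhead) - 1
        return self.markers[i] if i >= 0 else None


index = MarkerIndex([Marker(61.25, "note", "t2.jpg"),
                     Marker(0.0, "shot", "t0.jpg"),
                     Marker(12.5, "shot", "t1.jpg")])
current = index.active(30.0)
print(current.thumbnail, timecode(current.time))  # t1.jpg 00:00:12.500
```

Precomputing the `times` list keeps each lookup at O(log n), which matters when scrubbing fires many position updates per second.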

Once the annotation process is finished, users have a wealth of preserved data and observations about the media. Past students have used this data to create visualizations of their observations, which they then used to test hypotheses and produce interpretations of multimodal content. Mediate's utility ranges from helping students learn to close-read time-based media narratively, visually, and sonically to supporting scholars working individually or collectively to reevaluate central questions in the history of moving images or sound recording at both microscopic and macroscopic scales.
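As a small example of turning exported annotations into something plottable, the sketch below counts markers of each type in one-minute bins. It assumes a CSV export with `time` (in seconds) and `type` columns. Those column names are hypothetical, and Mediate's actual export schema may differ. The resulting counts could be fed to any charting tool.

```python
import csv
import io
from collections import Counter
from typing import Dict, Tuple


def counts_per_bin(csv_text: str, bin_seconds: float = 60.0) -> Dict[Tuple[int, str], int]:
    """Count markers per (time bin, marker type) from a CSV export.

    Assumes hypothetical columns "time" (seconds) and "type"; other columns are ignored.
    """
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        bin_index = int(float(row["time"]) // bin_seconds)
        counts[(bin_index, row["type"])] += 1
    return dict(counts)


export = """time,type,note
5.0,shot,opening pan
42.5,sound,music cue
58.0,shot,close-up
75.2,shot,cut to exterior
"""
for (minute, kind), n in sorted(counts_per_bin(export).items()):
    print(f"minute {minute}: {n} {kind} marker(s)")
```

Changing `bin_seconds` moves between the "microscopic" and "macroscopic" scales mentioned above: small bins show moment-to-moment rhythm, large bins show the shape of a whole work.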

Sample Use Cases

Since 2012, Mediate has been used in eighteen courses, reaching over 400 students. While use cases continue to grow, Mediate has been used primarily for courses in Film & Media Studies, Linguistics, and Music. We continue to seek other use cases, including the potential for using Mediate to crowdsource identifications of, and information about, materials in local historical film archives.

To read more about current use cases, please see a recent publication in Digital Humanities Quarterly, “Audiovisualities out of Annotation: Three Case Studies in Teaching Digital Annotation with Mediate” (March 2021). Below are two sample annotation interfaces for linguistics and music.

Technical Specs

Mediate is a web-enabled software platform built in Python and JavaScript. It makes use of several popular, well-maintained open source libraries, including Django for its server-side application, OpenCV for computer vision and image processing, FFmpeg for multimedia transcoding, and ReactJS for its client-side application. Mediate is designed to handle large sets of annotations (which we also call “markers”) and supports concurrent updates through WebSockets, making it a truly collaborative real-time system. Its modules are tied together by a RESTful API, through which users can create custom tags, upload media, create groups, add annotations, and query data in CSV or JSON format.

All uploaded video files and resulting derivatives are currently stored in a secure, aliased directory and are available only to users who have sufficient permissions to view them. In addition to the images and video files produced by the video processing pipeline, all annotations and relevant metadata are stored in a secure PostgreSQL database that is backed up regularly. Because Mediate is not designed as a digital asset management or preservation system, original uploads are removed from the server once the derivatives have been successfully created.
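The rule that an original upload is deleted only after its derivatives have been created can be sketched as follows. This is a minimal illustration, not Mediate's pipeline: the `transcode` callable is a hypothetical stand-in for the real FFmpeg step, and treating a derivative as "successfully created" when it exists and is non-empty is an assumption.

```python
import tempfile
from pathlib import Path
from typing import Callable, List


def process_upload(original: Path, outputs: List[Path],
                   transcode: Callable[[Path, List[Path]], None]) -> bool:
    """Create derivatives, then delete the original only if every one succeeded.

    `transcode` is a hypothetical stand-in for the real FFmpeg step and is
    expected to write every path in `outputs`. If it raises, the exception
    propagates and the original is left in place. Returns True when the
    original was removed.
    """
    transcode(original, outputs)
    # Assumed success test: at least one derivative, and each exists and is non-empty.
    if outputs and all(p.is_file() and p.stat().st_size > 0 for p in outputs):
        original.unlink()
        return True
    return False


def fake_transcode(src: Path, outs: List[Path]) -> None:
    """Placeholder that copies bytes instead of transcoding."""
    for out in outs:
        out.write_bytes(src.read_bytes())


with tempfile.TemporaryDirectory() as tmp:
    upload = Path(tmp, "upload.mov")
    upload.write_bytes(b"raw video bytes")
    removed = process_upload(upload, [Path(tmp, "clip.mp4")], fake_transcode)
    print(removed, upload.exists())  # True False
```

Checking every derivative before calling `unlink` means a failed or partial transcode leaves the original available for a retry.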

What’s Next?

Mediate has entered its second phase of development. Based on student feedback, Josh Romphf has made substantial changes to the user interface. In spring 2019, we ran student focus groups on Mediate to gain further insight into which future features to prioritize. In fall 2019, undergraduate research assistant Tiamat Fox worked with the project team to develop more robust documentation and synthesize feedback from the expanded use cases. Much of 2020 was spent developing a more user-friendly administrative interface, which is now ready to be tested. Moving forward, we are looking at developing more robust data querying, roles and permissions, location-based marking, durative markers, IIIF Presentation API 3.0 support, and in-platform data visualizations.

Receive Updates

Sign up to receive updates about Mediate. In the coming year, we will reach out to colleagues at other institutions to test the new administrative interface and to gauge community interest in using Mediate in a research project or course.

Project Team

Platform Development

- Joel Burges, Principal Investigator, Associate Professor, Department of English, Film and Media Studies, Digital Media Studies, and Director of the Graduate Program in Visual and Cultural Studies, College of Arts, Sciences, and Engineering

- Josh Romphf, Lead Designer and Developer, Digital Scholarship Programmer, Digital Scholarship Lab, River Campus Libraries

- Emily Sherwood, Project Manager, Director, Digital Scholarship Lab, River Campus Libraries

- Joe Easterly, Digital Scholarship Librarian, Digital Scholarship Lab, River Campus Libraries

- Maurini Strub, Director of Assessment, River Campus Libraries

Project Collaborators

- Darren Mueller, Assistant Professor, Musicology, Eastman School of Music

- Solveiga Armoskaite, Assistant Professor, Linguistics, College of Arts, Sciences, and Engineering

- Joanne Bernardi, Professor, Japanese and Film and Media Studies, College of Arts, Sciences, and Engineering

- Patrick Sullivan, Doctoral Candidate, Visual and Cultural Studies, College of Arts, Sciences, and Engineering

- Maddie Ullrich, PhD student in Visual and Cultural Studies, Mellon Digital Humanities Fellow, College of Arts, Sciences, and Engineering

- Byron Fong, PhD student in Visual and Cultural Studies, Mellon Digital Humanities Fellow, College of Arts, Sciences, and Engineering

Previous Collaborators

The project team owes a debt to those who worked on the early stages of development, including:

- Nora Dimmock, former Project Manager and Assistant Dean, River Campus Libraries; currently, Deputy University Librarian, Brown University

- Tom Downey, former Library Network Systems Administrator, Library IT Services, River Campus Libraries; currently, Infrastructure Engineer, Springshare

- Sean Morris, former Interface Designer, Information Discovery Team, River Campus Libraries

- Nathan Sarr, former Web Applications Programmer, Information Discovery Team, River Campus Libraries

- Ralph Arbelo, Library Network Systems Administrator, Library IT Services, River Campus Libraries

- Stephen Alexander, Library Network Systems Administrator, Library IT Services, River Campus Libraries

- Tiamat Fox, Undergraduate Research Assistant, College of Arts, Sciences, and Engineering